Most downloaded PACMPL paper of 2025

You can find the list of most-downloaded PACMPL papers in 2025 here: https://dl.acm.org/journal/pacmpl/announcements

Researchers from the Max Planck Institute for Software Systems (MPI-SWS) have authored a total of 5 papers accepted to POPL 2026. This is the ninth year in a row that MPI-SWS researchers have published 5+ papers in POPL.

Congratulations to all our POPL authors!

New study shows that humans and large language models respond surprisingly similarly to confusing program code

By comparing the brain activity of study participants with model uncertainty, researchers from Saarland University and the Max Planck Institute for Software Systems have shown for the first time that the reactions of humans and large language models (LLMs) to complex or misleading program code are significantly aligned. Building on this, the team developed a data-driven method to automatically detect such confusing areas in code, a promising step toward better AI assistants for software development.

The team, led by Sven Apel, Professor of Software Engineering at Saarland University, and Dr. Mariya Toneva, a faculty member at the Max Planck Institute for Software Systems and head of the research group Bridging AI and Neuroscience, investigated how humans and large language models respond to confusing program code. The patterns underlying such code, known as atoms of confusion, are well studied: they are short, syntactically correct programming patterns that mislead humans and can throw even experienced developers off track.

To find out whether LLMs and humans “think” about the same stumbling blocks, the research team took an interdisciplinary approach. On the one hand, they used data from an earlier study by Apel and colleagues, in which participants read confusing and clean code variants while their brain activity and attention were measured using electroencephalography (EEG) and eye tracking. On the other hand, they analyzed the “confusion”, or model uncertainty, of LLMs using so-called perplexity values. Perplexity is an established metric for evaluating language models: it quantifies how uncertain a model is when predicting a sequence of text tokens, based on the probabilities it assigns to those tokens.
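To make the perplexity metric concrete, here is a minimal Python sketch that computes it from per-token probabilities, using the standard definition: perplexity is the exponential of the average negative log-probability (the per-token "surprisal"). The probability values below are made up for illustration; in practice they would come from a language model's predictions for each actual next token.

```python
import math

def token_surprisals(probs):
    """Surprisal -ln p(token | context) for each token, in nats."""
    return [-math.log(p) for p in probs]

def perplexity(probs):
    """Perplexity: exp of the mean surprisal over the token sequence."""
    s = token_surprisals(probs)
    return math.exp(sum(s) / len(s))

# Hypothetical probabilities a model assigned to the tokens that actually occur.
clean = [0.9, 0.8, 0.85, 0.9]
confusing = [0.9, 0.8, 0.05, 0.9]  # one token the model found very unexpected

print(perplexity(clean), perplexity(confusing))
```

As a sanity check on the definition: if every token had probability 0.25, the perplexity would be 4, as if the model were choosing uniformly among four options.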

The result: Wherever humans got stuck on code, the LLM also showed increased perplexity. EEG signals from participants—especially the so-called late frontal positivity, which in language research is associated with unexpected sentence endings—rose precisely where the language model’s uncertainty spiked. “We were astounded that the peaks in brain activity and model uncertainty showed significant correlations,” says Youssef Abdelsalam, who was advised by Toneva and Apel and was instrumental in conducting the study as part of his doctoral studies.

Based on this similarity, the researchers developed a data-driven method that automatically detects and highlights unclear parts of code. In more than 60 percent of cases, the algorithm successfully identified known, manually annotated confusing patterns in the test code and even discovered more than 150 new, previously unrecognized patterns that also coincided with increased brain activity.
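The article does not describe how the detection method works internally. Purely as a hypothetical illustration of the general idea of flagging spots where model uncertainty spikes, the Python sketch below marks tokens whose surprisal exceeds the sequence mean by more than `k` standard deviations. The threshold rule, the parameter `k`, the tokens, and the probabilities are all assumptions; this is not the researchers' algorithm.

```python
import math
import statistics

def flag_spikes(tokens, probs, k=1.5):
    """Return (index, token) pairs whose surprisal exceeds mean + k * std.

    Hypothetical threshold rule, for illustration only.
    """
    surprisal = [-math.log(p) for p in probs]
    mean = statistics.mean(surprisal)
    sd = statistics.pstdev(surprisal)
    if sd == 0:
        return []  # uniform uncertainty: nothing stands out
    return [(i, t) for i, (t, s) in enumerate(zip(tokens, surprisal))
            if s > mean + k * sd]

# Hypothetical tokens of the Java expression `x = a++ + ++a;` with made-up probabilities.
tokens = ["x", "=", "a", "++", "+", "++", "a", ";"]
probs = [0.9, 0.95, 0.8, 0.6, 0.7, 0.02, 0.85, 0.95]
print(flag_spikes(tokens, probs))
```

Returning indices rather than bare tokens matters here, because the same token (`++`) can appear in both an unremarkable and a confusing position.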

“With this work, we are taking a step toward a better understanding of the alignment between humans and machines,” says Max Planck researcher Mariya Toneva. “If we know when and why LLMs and humans stumble in the same places, we can develop tools that make code more understandable and significantly improve human–AI collaboration,” adds Professor Sven Apel.

Through their project, the researchers are building a bridge between neuroscience, software engineering, and artificial intelligence. The study, currently published as a preprint, was accepted for publication at the International Conference on Software Engineering (ICSE), one of the world’s leading conferences in the field of software development. The conference will take place in Rio de Janeiro in April 2026. The authors of the study are: Youssef Abdelsalam, Norman Peitek, Anna-Maria Maurer, Mariya Toneva, and Sven Apel.

Researchers from the Max Planck Institute for Software Systems (MPI-SWS) and the Max Planck Institute for Security and Privacy (MPI-SP) have authored a total of 9 papers accepted to POPL 2025. This is the eighth year in a row that MPI-SWS researchers have published 5+ papers in POPL. Furthermore, as of this year, MPI-SWS faculty member Derek Dreyer has published 25 papers at POPL, a new record!

Congratulations to all our POPL authors!

So-called ‘discrete dynamical systems’ form the basis for key computational challenges in a variety of fields, from program analysis and computer-aided verification to artificial intelligence and theoretical biology. Creating algorithmic solutions to make these systems amenable to automated verification techniques remains a major challenge. Researchers at the Max Planck Institute for Software Systems in Saarbrücken and the French ‘Centre National de la Recherche Scientifique’ are now working to advance this automated verification approach with substantial funding from the European Research Council.

The interdisciplinary project, titled ‘Dynamical and Arithmetical Model Checking (DynAMiCs)’, is led by three principal investigators: Professor Joël Ouaknine, Scientific Director at the Max Planck Institute for Software Systems (MPI-SWS) in Saarbrücken; Professor Florian Luca, also of MPI-SWS and of Stellenbosch University in South Africa; and Professor Valérie Berthé from the French ‘Institut de recherche en informatique fondamentale’ of the ‘Centre National de la Recherche Scientifique (CNRS)’ and Université Paris Cité.

“The paradigm of ‘model checking’ is a powerful method that allows us to automatically verify, with mathematical certainty, whether a system behaves as intended,” says Prof. Joël Ouaknine. However, many discrete dynamical systems (systems that change over time following specific rules) cannot currently be verified with the available model-checking approaches.
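For intuition about why finite systems are the comparatively easy case, here is a minimal explicit-state check of a safety property in Python. It explores every reachable state of a finite transition system by breadth-first search and either certifies that no "bad" state is reachable or returns a shortest counterexample path. The toy counter system and the properties checked are hypothetical. Exhaustive exploration like this only terminates because the state space is finite; many discrete dynamical systems have infinitely many states, which is where such a simple approach breaks down.

```python
from collections import deque

def check_safety(initial, successors, is_bad):
    """Explicit-state safety check by breadth-first search.

    Returns None if no bad state is reachable (the property holds); otherwise
    returns a shortest path from `initial` to a bad state as a counterexample.
    Terminates only if the set of reachable states is finite.
    """
    parent = {initial: None}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        if is_bad(state):
            path = [state]
            while parent[path[-1]] is not None:
                path.append(parent[path[-1]])
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = state
                queue.append(nxt)
    return None

def step(n):
    # Hypothetical system: a counter modulo 8 that can add 2 or add 4.
    return [(n + 2) % 8, (n + 4) % 8]

print(check_safety(0, step, lambda n: n % 2 == 1))  # None: odd values are unreachable
print(check_safety(0, step, lambda n: n == 6))      # a counterexample path
```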

One of the leading objectives of this new research project is thus to substantially broaden the classes of dynamical systems and properties that can be algorithmically handled via model checking. Specifically, it aims to tackle longstanding mathematical challenges, such as the Skolem Problem, that could lead to breakthroughs in understanding and verifying the behavior of other complex systems.

The Skolem Problem asks whether, within a system following specific mathematical rules, a certain state will eventually be reached. In other settings, the same question could ask whether a computer program will terminate under specific conditions, whether an electric vehicle’s battery will fully deplete under a specific driving pattern, or whether a manufacturing robot will reach a precise target after following programmed movements. Although seemingly simple, this mathematical problem has remained unsolved for nearly a century and has puzzled mathematicians and computer scientists alike.
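In its standard formulation, the Skolem Problem asks: given an integer linear recurrence u_n = a_1 u_{n-1} + ... + a_k u_{n-k} with integer initial values, is u_n = 0 for some n? The Python sketch below, with hypothetical example sequences, shows why the problem is hard in a precise sense: computing terms can confirm a zero once one is found, but no search bound is known that works for all recurrences, so a bounded search that finds nothing proves nothing.

```python
def first_zero(coeffs, initial, max_n):
    """Search for the first n < max_n with u_n == 0.

    The sequence satisfies u_n = coeffs[0]*u_{n-1} + ... + coeffs[k-1]*u_{n-k},
    with u_0, ..., u_{k-1} given by `initial` (len(initial) == len(coeffs)).
    Returns the index, or None if no zero appears among the first max_n terms,
    which does NOT rule out a zero later on.
    """
    k = len(coeffs)
    u = list(initial)
    for n in range(max_n):
        if n >= k:
            u.append(sum(c * u[n - 1 - i] for i, c in enumerate(coeffs)))
        if u[n] == 0:
            return n
    return None

print(first_zero([2, -1], [-3, -2], 100))  # u_n = 2u_{n-1} - u_{n-2}: -3, -2, -1, 0, ...
print(first_zero([1, 1], [1, 1], 50))      # Fibonacci-like, never zero in this range
```

A `None` result only says that no zero occurs among the first `max_n` terms; deciding the question for every recurrence is exactly what remains open.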

Besides the Skolem Problem, the project also addresses additional longstanding mathematical challenges such as the Pisot Conjecture, piecewise-affine map reachability, and the Periodicity Conjecture. Addressing these complex problems demands innovative approaches that integrate insights from multiple areas of mathematics and computer science. “This funding allows us to leverage the interdisciplinary synergies between different fields, bringing together expertise that might not otherwise have come together,” says Florian Luca. The DynAMiCs project unites expertise in algorithmic verification led by Prof. Joël Ouaknine, symbolic dynamics under Prof. Valérie Berthé, and analytic number theory led by Prof. Florian Luca.

The project is funded via a European Research Council (ERC) Synergy Grant totaling 7.5 million euros over six years, with 5 million euros allocated to MPI-SWS. ERC Grants are among the most prestigious research awards globally, with Synergy Grants being especially competitive and offering the highest funding levels. In the current funding round, 548 proposals were submitted, of which only 57 were approved (10.4%).

Press Release by the European Research Council: https://erc.europa.eu/news-events/news/erc-2024-synergy-grants-results

List of Selected Projects: https://erc.europa.eu/sites/default/files/2024-11/erc-2024-syg-results-all-domains.pdf

Professor Joël Ouaknine, PhD
Scientific Director at MPI-SWS and Coordinating Principal Investigator of DynAMiCs
Max Planck Institute for Software Systems, Saarbrücken
Tel: +49 (0)681 9303 9701
E-Mail: joel@mpi-sws.org

Philipp Zapf-Schramm
Max Planck Institute for Informatics
Tel: +49 681 9325 5409
E-Mail: pzs@mpi-inf.mpg.de

Researchers from the Max Planck Institute for Software Systems (MPI-SWS) and the Max Planck Institute for Security and Privacy (MPI-SP) have authored a total of 7 papers accepted to POPL 2024. This is the seventh year in a row that MPI-SWS researchers have published 5+ papers in POPL. Congratulations to all our POPL authors!

Researchers from the Max Planck Institute for Software Systems (MPI-SWS) and the Max Planck Institute for Security and Privacy (MPI-SP) have authored a total of 7 papers accepted to POPL 2024. This is the seventh year in a row that MPI-SWS researchers have published 5+ papers in POPL. Congratulations to all our POPL authors!

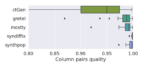

Paul Francis's Open Diffix project has released SynDiffix, an open-source Python package that generates statistically accurate, privacy-preserving synthetic data from structured data. Using SynDiffix, a data owner can safely share data while retaining most of the statistical properties of the original data. Analysts can work with the synthetic data as though it were the original.

Using the novel techniques of sticky noise and range snapping, SynDiffix breaks new ground in data accuracy. It is 10 to 100 times more accurate than the open-source tool CTGAN, and 5 to 10 times more accurate than the best commercial synthetic data generators. This makes SynDiffix particularly well suited to descriptive analytics such as histograms, heatmaps, averages and standard deviations, and column correlations. Like other tools, however, it can also be used for ML modeling. Francis is hopeful that SynDiffix will find wide practical use as well as motivate more research on synthetic data.
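The item above does not explain sticky noise. Roughly, the idea in the Diffix line of work is that noise is derived deterministically from the data being reported on, so repeating the same query returns the same noisy answer and an analyst cannot average the noise away. The Python sketch below illustrates only that idea for a noisy count. Seeding a random generator from a SHA-256 hash of a secret salt, a bucket label, and the set of user IDs, as well as the function name and the standard deviation, are assumptions for illustration; this is not SynDiffix's actual implementation.

```python
import hashlib
import random

def sticky_noisy_count(user_ids, bucket_label, sd=1.0, salt=b"hypothetical-secret-salt"):
    """Noisy count of distinct users whose noise is 'sticky' (data-dependent).

    The random seed is a hash of a secret salt, the bucket label, and the sorted
    set of user IDs, so repeating the same query yields exactly the same noise
    and averaging repeated answers does not remove it. Simplified illustration.
    """
    ids = sorted(set(user_ids))
    h = hashlib.sha256(salt)
    h.update(bucket_label.encode("utf-8"))
    for uid in ids:
        h.update(b"\x00" + str(uid).encode("utf-8"))  # separator between IDs
    rng = random.Random(int.from_bytes(h.digest(), "big"))
    return len(ids) + rng.gauss(0.0, sd)

a = sticky_noisy_count([101, 102, 103], "age=30")
b = sticky_noisy_count([103, 101, 102, 102], "age=30")  # same users, other order
print(a, b, a == b)
```

The design choice to tie noise to the data rather than draw fresh randomness per query is what makes repeated queries uninformative: the second query above gets exactly the same answer as the first.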

At ICALP 2023 and LICS 2023, two of the top conferences in logic and automata, there will be 6 papers by MPI-SWS researchers.

LICS 2023 (accepted papers: https://lics.siglog.org/lics23/accepted.php )

ICALP 2023 (accepted papers: https://icalp2023.cs.upb.de/accepted-papers/ )

Researchers from the Max Planck Institute for Software Systems (MPI-SWS) and the Max Planck Institute for Security and Privacy (MPI-SP) have authored a total of 6 papers accepted to POPL 2023. This is the sixth year in a row that MPI-SWS researchers have published 5+ papers in POPL. Furthermore, one Max Planck paper was awarded a 2023 POPL Distinguished Paper Award. Congratulations to all our POPL authors!

Researchers from MPI-SWS have authored a total of 11 papers accepted to POPL 2022 (just under 17% of all accepted papers). This is the fifth year in a row that MPI-SWS researchers have published 5+ papers in POPL. Furthermore, two Max Planck papers were awarded a 2022 POPL Distinguished Paper Award. Congratulations to all our POPL authors!

MPI-SWS faculty member Elissa Redmiles, along with collaborators Samuel Dooley and Professor John Dickerson from the University of Maryland as well as Professor Dana Turjeman from Reichman University, helped the Louisiana Department of Health advertise its COVID-19 contact tracing app. As part of this work, the researchers conducted a randomized, controlled field experiment to provide guidance to other jurisdictions on how to most effectively and ethically advertise these public health tools. Linux Foundation Public Health has featured their findings as guidance for other jurisdictions looking to advertise their contact tracing apps. This work is part of a larger project Redmiles leads on the ethical adoption of COVID-19 apps: https://covidadoptionproject.mpi-sws.org/.

MPI-SWS faculty member Elissa Redmiles was quoted in Rolling Stone, El País (Spain's largest newspaper), and NetzPolitik regarding ongoing research with MPI-SWS group member Vaughn Hamilton and MPI-SWS intern Hanna Barakat (also at Brown University) on the shift toward digital sex work as a result of COVID-19, as well as her work with collaborators Catherine Barwulor (Clemson University), Allison McDonald (University of Michigan), and Eszter Hargittai (University of Zurich) on digital discrimination against European sex workers, originally published at ACM CHI.

Ralf Jung's doctoral dissertation on "Understanding and Evolving the Rust Programming Language" has received the ETAPS Doctoral Dissertation Award for 2021. The award is given to the PhD student who has made the most original and influential contribution to the research areas in the scope of the ETAPS conferences and who graduated in 2021 from a European academic institution. Ralf was advised by MPI-SWS faculty member Derek Dreyer.

A committee of international experts evaluated candidate dissertations with respect to originality, relevance, and impact on the field, as well as the quality of writing. The committee found that Dr. Ralf Jung's dissertation is very well-written and makes several highly original contributions in the area of programming language semantics and verification. The committee was also particularly impressed by the dissertation's technical depth, the quality and quantity of the associated published work, and its relevance and impact in both academia and industry.

Ralf's work on Rust was also featured in a recent Communications of the ACM article, "Safe Systems Programming in Rust" by Ralf Jung, Jacques-Henri Jourdan, Robbert Krebbers, and Derek Dreyer. The article appeared in the April 2021 issue of CACM, together with a short video about this work produced by ACM.

Researchers from the Max Planck Institute for Software Systems (MPI-SWS) and the new Max Planck Institute for Security and Privacy (MPI-SP) have authored a total of 8 papers accepted to POPL 2021 (over 10% of all accepted papers). This is the fourth year in a row that MPI-SWS researchers have published 5+ papers in POPL. Furthermore, two Max Planck papers were awarded a Distinguished Paper Award. Congratulations to all our POPL authors!

MPI-SWS papers:

MPI-SP papers:

Ever since the birth of Artificial Intelligence (AI) at the Dartmouth workshop in 1956, researchers have debated the exact role that AI will play, and should play, in society. While some have envisioned a romanticized version of AI, as portrayed in the narratives of 20th-century movies, successful AI developments are often closer to J. C. R. Licklider’s vision of AI, which emphasizes a collaborative relationship between humans and AI and focuses on hybrid human-AI decision making.

In the Multi-Agent Systems group at MPI-SWS, we study multi-agent sequential decision making using formal frameworks that can capture nuances often present in human-AI collaborative settings. Specifically, we study different aspects of agent-to-agent interaction in settings where agents share a common goal but can have different perceptions of reality. The overall goal is to design a more effective AI decision maker that accounts for the behavior of its collaborators and compensates for their imperfections. To achieve this goal, the AI decision maker can use steering policies to nudge its collaborators to adopt better policies, i.e., policies that lead to an improved joint outcome. In what follows, we summarize some of our recent results related to this agenda.

Accounting for misaligned world-views. An effective way to model behavioral differences between humans and modern AI tools (based on machine learning) is through a model that captures the misalignment in how the agents perceive their environment. Using this approach, we have proposed a new computational model, called the Multi-View Decision Process, suitable for modeling two-agent cooperative scenarios in which agents agree on their goals but disagree on how their actions affect the state of the world [1]. This framework enables us to formally analyze the utility of accounting for the misalignment in agents’ world-views when only one of the agents has a correct model of the world. Our results show that modeling such a misalignment is not only beneficial but critical. The main takeaway is that, to facilitate more successful collaboration among agents, it is not sufficient to make one agent (more) accurate in its world-view: naively improving the accuracy of one agent can degrade the joint performance unless one explicitly accounts for the imperfections of the other agent. To this end, we have developed an algorithm for finding an approximately optimal steering policy for the agent with the correct world-view.

Adapting to a non-stationary collaborator. In addition to accounting for a misalignment in world-views, decision makers must also account for the effects of their behavior on other agents. Namely, decision makers respond to each other's behavior, which makes that behavior non-stationary, i.e., it changes over time. In the context of human-AI collaboration, this might happen if the human agent changes their behavior over time, for example, as they learn to interact with the AI agent. Such non-stationary behavior of the human agent could have a negative impact on the collaboration and can lead to substantially worse performance unless the AI agent adapts to the changing behavior of the human agent. We can model this situation with a two-agent setting similar to the one presented above, but one that allows agents to change their behavior as they interact over time [2]. The agent with the correct world-view now has to adapt to the non-stationary behavior of its collaborator. We have proposed a learning procedure that has provable guarantees on the joint performance under the assumption that the behavior of the other agent does not change abruptly over time. We have shown that this assumption is not trivial to relax: obtaining the same guarantees without it would require solving a computationally intractable problem.

Steering via environment design. The previous two cases consider indirect steering policies, in which the agent with the correct model implicitly influences the behavior of its collaborator by acting in the world. A more explicit influence would be obtained if this agent's actions directly changed the world-view of its collaborator. In the context of human-AI collaboration, the AI agent could shape the environment to nudge the human agent to adopt a more efficient decision policy. This can be done through reward shaping, i.e., by making some actions more costly for humans in terms of effort, or through dynamics shaping, i.e., by changing the perceived influence that the human’s actions have on the world. In machine learning terminology, such a steering strategy is a form of adversarial attack by the AI agent (attacker) on the human agent. In our recent work [3], we have characterized how to optimally perform these types of attacks and how costly they are from an attacker’s point of view.
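As a hypothetical and heavily simplified illustration of reward shaping, the Python sketch below considers a single decision with known rewards rather than the sequential setting studied in [3]. Assuming the designer can only make actions more costly (never cheaper) and leaves the target action untouched, it computes the smallest extra effort cost per action so that a reward-maximizing agent prefers the designer's target action by at least a given margin. The action names, rewards, and margin are made up.

```python
def steering_costs(rewards, target, margin):
    """Minimal added cost per non-target action so that `target` beats every
    other action by at least `margin`, assuming the designer may only make
    actions more costly and leaves the target action untouched."""
    bar = rewards[target] - margin  # highest shaped reward another action may keep
    return {a: max(0.0, r - bar) for a, r in rewards.items() if a != target}

def best_action(rewards, costs):
    """Action a reward-maximizing agent picks after the costs are applied."""
    shaped = {a: r - costs.get(a, 0.0) for a, r in rewards.items()}
    return max(shaped, key=shaped.get)

rewards = {"shortcut": 1.0, "safe_route": 0.75, "wait": 0.25}
costs = steering_costs(rewards, "safe_route", margin=0.125)
print(costs, best_action(rewards, costs))
```

Each cost is individually minimal under these assumptions: any smaller penalty on an action would leave its shaped reward above `rewards[target] - margin`.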

References:

[1] Dimitrakakis, C., Parkes, D.C., Radanovic, G. and Tylkin, P., 2017. Multi-view Decision Processes: The Helper-AI Problem. In Advances in Neural Information Processing Systems.

[2] Radanovic, G., Devidze, R., Parkes, D. and Singla, A., 2019. Learning to Collaborate in Markov Decision Processes. In International Conference on Machine Learning.

[3] Rakhsha, A., Radanovic, G., Devidze, R., Zhu, X. and Singla, A., 2020. Policy Teaching via Environment Poisoning: Training-time Adversarial Attacks against Reinforcement Learning. In International Conference on Machine Learning.

Researchers from the Max Planck Institute for Software Systems (MPI-SWS), the Max Planck Institute for Informatics (MPI-INF), and the Max Planck Institute for Security and Privacy (MPI-SP) have coauthored 17 papers at the colocated LICS 2020 and ICALP 2020, two of the top conferences in theoretical computer science. LICS is the premier conference on logic in computer science and ICALP is the flagship conference of the European Association for Theoretical Computer Science.

MPI-SWS papers:

MPI-INF papers:

MPI-SP papers:

Due to impressive advances in Machine Learning and the wide availability of data, neural networks are rapidly becoming prevalent in our everyday lives, for instance by assisting in image-classification or decision-making tasks. As a result, there is growing concern about the reliability of neural networks in performing these tasks. In particular, it could be disastrous if an autonomous vehicle misclassified a street sign, or if a recidivism-risk algorithm, which predicts whether a criminal is likely to re-offend, were unfair with respect to race.

In the Practical Formal Methods group at MPI-SWS, we have recently focused on applying techniques from Software Engineering, including static analysis and test generation, to validate and verify properties of neural networks, such as robustness and fairness. In the following, we give a brief overview of three research directions we have been pursuing in this setting.

Blackbox Fuzzing of Neural Networks

By now, it is well known that even very subtle perturbations of a correctly classified image, such as a street sign, could cause a neural network to classify the new image differently. Such perturbed images are referred to as adversarial inputs and pose a critical threat to important applications of Machine Learning, like autonomous driving.

In our group, we recently developed DeepSearch [1], a blackbox-fuzzing technique that generates adversarial inputs for image-classification neural networks. Starting from a correctly classified image, DeepSearch strategically mutates its pixels such that the resulting image is more likely to be adversarial. By exploiting spatial regularities of images, DeepSearch can generate adversarial inputs while querying the neural network only a few times, which makes the technique efficient. Moreover, through a refinement step, DeepSearch further reduces the already subtle pixel perturbations of an adversarial input.
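The following Python sketch conveys the general flavour of score-based blackbox fuzzing in a heavily simplified form. It only queries a classifier for its output scores and greedily sets individual pixels to the lower or upper end of an allowed perturbation range (an L-infinity ball of radius `eps`), keeping a change only if it lowers the margin of the original class. The toy linear "classifier", the `eps` budget, and the pixel-by-pixel greedy order are assumptions; DeepSearch itself exploits spatial regularities by mutating pixels strategically and adds a refinement step [1].

```python
def margin(scores, label):
    """True-class score minus best other score; negative means misclassified."""
    return scores[label] - max(s for i, s in enumerate(scores) if i != label)

def greedy_blackbox_attack(classify, image, label, eps, max_rounds=5):
    """Greedy score-based blackbox search in an L-infinity ball of radius eps.

    Only the classifier's output scores are queried (no gradients). Each pixel
    is tried at the low and high end of its allowed range; a change is kept only
    if it lowers the margin. Returns an adversarial image or None.
    """
    x = list(image)
    best = margin(classify(x), label)
    for _ in range(max_rounds):
        improved = False
        for i in range(len(x)):
            for v in (image[i] - eps, image[i] + eps):
                v = min(1.0, max(0.0, v))  # keep pixel values in [0, 1]
                if v == x[i]:
                    continue
                old, x[i] = x[i], v
                m = margin(classify(x), label)
                if m < best:
                    best, improved = m, True
                    if best < 0:
                        return x  # classification flipped
                else:
                    x[i] = old  # revert: no improvement
        if not improved:
            break
    return None

def toy_classify(x):
    # Hypothetical two-class linear "network" on a 4-pixel image.
    return [x[0] + x[1], x[2] + x[3] - 0.25]

print(greedy_blackbox_attack(toy_classify, [0.5, 0.5, 0.5, 0.5], label=0, eps=0.125))
```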

Adversarial-Input Detection for Neural Networks

To protect neural networks against adversarial inputs, we have developed RAID [2], a runtime-monitoring technique for detecting whether an input to a neural network is adversarial. Our technique consists of training a secondary classifier to identify differences in neuron activation values between correctly classified and adversarial inputs. RAID is effective in detecting adversarial inputs across a wide range of adversaries even when it is completely unaware of the type of adversary. In addition, we show that there is a simple extension to RAID that allows it to detect adversarial inputs even when these are generated by an adversary that has access to our detection mechanism.
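As a hedged illustration of the idea of a secondary classifier over neuron activations, the Python sketch below trains a logistic-regression detector on activation vectors labelled as coming from correctly classified (0) or adversarial (1) inputs. The activation values are made up, and the choice of logistic regression trained by plain batch gradient descent is an assumption; RAID's actual detector and features are described in [2].

```python
import math

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_detector(activations, labels, lr=0.5, epochs=500):
    """Logistic regression by batch gradient descent; label 1 = adversarial."""
    dim, n = len(activations[0]), len(activations)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        gw, gb = [0.0] * dim, 0.0
        for x, y in zip(activations, labels):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) - y
            gw = [g + err * xj for g, xj in zip(gw, x)]
            gb += err
        w = [wj - lr * g / n for wj, g in zip(w, gw)]
        b -= lr * gb / n
    return w, b

def is_adversarial(model, x, threshold=0.5):
    w, b = model
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) > threshold

# Made-up activation vectors from one hidden layer of the monitored network.
benign = [[0.9, 0.1, 0.2], [0.8, 0.2, 0.1], [0.85, 0.15, 0.25]]
adversarial = [[0.3, 0.7, 0.8], [0.2, 0.9, 0.6], [0.4, 0.6, 0.9]]
model = train_detector(benign + adversarial, [0] * 3 + [1] * 3)
print(is_adversarial(model, [0.88, 0.12, 0.2]), is_adversarial(model, [0.3, 0.8, 0.7]))
```

At runtime, the monitored network's activations for each new input would be passed to `is_adversarial` before its prediction is trusted.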

Fairness Certification of Neural Networks

Several studies have recently raised concerns about the fairness of neural networks. To list a few examples, commercial recidivism-risk and health-care systems have been found to be racially biased. There is also empirical evidence of gender bias in image searches, for instance when searching for “CEO”. And facial-recognition systems, which are increasingly used in law enforcement, have been found to be biased with respect to both gender and race. Consequently, it is critical to design tools and techniques for certifying the fairness of neural networks or characterizing their bias.

We take an important step toward meeting these needs by designing the LIBRA static-analysis framework [3] for certifying causal fairness of neural networks used to classify tabular data. In particular, given input features considered sensitive to bias, a neural network is causally fair if its output classification is not affected by different values of the sensitive features. At a high level, our approach combines a forward and a backward static analysis. The forward pass aims to divide the input space into independent partitions such that the backward pass can effectively determine the fairness of each partition. For the partitions where certification succeeds, LIBRA provides definite (in contrast to probabilistic) fairness guarantees; otherwise, it describes the input space for which bias occurs. We have designed this approach to be sound and configurable with respect to scalability and precision, thus enabling pay-as-you-go fairness certification.
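To make the definition of causal fairness concrete, here is a brute-force Python check for a classifier over a small, finite, discretized input domain: the classifier is fair if, for every assignment of the non-sensitive features, changing only the sensitive feature never changes the output; otherwise the function reports the inputs where bias occurs. Exhaustive enumeration is a stand-in chosen for illustration only. LIBRA instead uses a forward and a backward static analysis [3], which reasons about whole input partitions rather than enumerating individual points. The toy classifiers, feature names, and domains are hypothetical.

```python
from itertools import product

def find_bias(classify, domains, sensitive):
    """Return non-sensitive inputs whose output depends on the sensitive feature.

    `domains` maps each feature name to a finite list of values.
    An empty result means the classifier is causally fair on this domain.
    """
    others = [f for f in domains if f != sensitive]
    biased = []
    for values in product(*(domains[f] for f in others)):
        base = dict(zip(others, values))
        outputs = {classify({**base, sensitive: s}) for s in domains[sensitive]}
        if len(outputs) > 1:
            biased.append(base)
    return biased

# Hypothetical loan classifiers over small discretized feature domains.
domains = {"income": [1, 2, 3], "debt": [0, 1], "gender": ["f", "m"]}

def fair_model(x):
    return x["income"] - x["debt"] >= 2

def biased_model(x):
    return x["income"] - x["debt"] >= (2 if x["gender"] == "m" else 3)

print(find_bias(fair_model, domains, "gender"))
print(find_bias(biased_model, domains, "gender"))
```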

References

[1] Fuyuan Zhang, Sankalan Pal Chowdhury and Maria Christakis. DeepSearch: Simple and Effective Blackbox Fuzzing of Deep Neural Networks. CoRR abs/1910.06296, 2019.

[2] Hasan Ferit Eniser, Maria Christakis and Valentin Wüstholz. RAID: Randomized Adversarial-Input Detection for Neural Networks. CoRR abs/2002.02776, 2020.

[3] Caterina Urban, Maria Christakis, Valentin Wüstholz and Fuyuan Zhang. Perfectly Parallel Fairness Certification of Neural Networks. CoRR abs/1912.02499, 2019.

The following three MPI-SWS papers have been accepted to AAAI 2020, one of the flagship conferences in artificial intelligence:

On January 9, 2020, MPI-SWS faculty member Paul Francis published the article "Dear Differential Privacy: Put Up or Shut Up" on Medium. The article, which has now reached 1000 views, describes the failure of Differential Privacy as the basis for data protection in the Facebook / Social Sciences One project.

The Facebook / Social Sciences One project is an attempt to release Facebook data on URL sharing to researchers so as to better understand the role of Facebook in influencing elections. The project raised 11 million dollars from private funders, and research grants were awarded to twelve research teams around the world. Facebook decided to use Differential Privacy as the means of anonymizing the data. After one year, however, Facebook had not supplied the data. When the funders threatened to pull the funding, Facebook did release a dataset, but the quality of the data was so poor that the proposed research could not be done.

Francis' article describes how and why the data release failed, discusses the shortcomings of Differential Privacy, and calls on the privacy research community to expand the scope of what passes for valid data anonymity research.

Software systems have become ubiquitous in our modern world and, consequently, so have bugs and glitches. While many software failures are harmless and often merely annoying, some can have catastrophic consequences. Just imagine the dire results of an autonomous car failing to stop at a red traffic light or a plane's control system becoming unresponsive during takeoff or landing.

In our research, we address these problems and develop intelligent tools that help engineers to build safe and reliable hardware, software, and cyber-physical systems. To this end, we employ a unique and promising strategy, which has recently also regained major attention in the artificial intelligence community: combining inductive techniques from the area of machine learning and deductive techniques from the area of mathematical logic (e.g., see the recent Dagstuhl seminar on "Logic and Learning", which was co-organized by one of our group members). Specifically, our research revolves around three topics, to which the remainder of this article is devoted: verification, synthesis, and formal specification languages.

Verification is an umbrella term referring to tools and techniques that formally prove that a given system satisfies its specification. In the context of software, a popular approach is deductive verification. The idea is easy to describe: first, the given program is augmented with annotations (typically loop invariants, pre-/post-conditions of method calls, and shape properties of data structures), which capture the developer's intent and facilitate the deductive reasoning in a later step; second, the program, together with its annotations, is translated into formulas in a suitable logic, called verification conditions; third, the verification conditions are checked for validity using automated theorem provers. Thanks to brilliant computer scientists, such as Edsger Dijkstra and Tony Hoare, as well as recent advances in constraint solving, the latter two steps can be (almost) entirely automated. However, the first step still remains a manual, error-prone task that requires significant training, experience, and ingenuity. In fact, this is one of the main obstacles preventing a widespread adoption of formal verification in practice.

To also automate the challenging first step, we have developed a novel approach, called ICE learning [1], which intertwines inductive and deductive reasoning. The key idea is to pit a (deductive) program verifier against an (inductive) learning algorithm, whose goal is to infer suitable annotations from test-runs of the program and failed verification attempts. The actual learning proceeds in rounds. In each round, the learning algorithm proposes candidate annotations based on the data it has gathered so far. The program verifier then tries to prove the program correct using the proposed annotations. If the verification fails, the verifier reports data back to the learning algorithm explaining why the verification has failed. Based on this new information, the learning algorithm refines its conjecture and proceeds to the next round. The loop stops once the annotations are sufficient to prove the program correct.
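
The rounds described above can be sketched for a deliberately tiny, hypothetical program: `x = 0; while x < 10: x = x + 1; assert x == 10`. Several simplifications are assumptions of this sketch, not features of ICE learning as published:

- candidate invariants are restricted to integer intervals;
- the "verifier" enumerates the states inside the candidate instead of calling a theorem prover;
- the learner simply returns the smallest consistent interval.

The teacher answers with the three kinds of feedback that ICE learning is named after: positive examples (states the invariant must include), negative counterexamples (states it must exclude), and implications (if it includes a, it must include b).

```python
from typing import Optional, Set, Tuple

Interval = Optional[Tuple[int, int]]  # None encodes the empty invariant

# Hypothetical program:  x = 0; while x < 10: x = x + 1; assert x == 10
INIT = 0
def guard(x: int) -> bool: return x < 10
def body(x: int) -> int: return x + 1
def post(x: int) -> bool: return x == 10


def contains(h: Interval, x: int) -> bool:
    return h is not None and h[0] <= x <= h[1]


def learner(pos: Set[int], neg: Set[int], imp: Set[Tuple[int, int]]) -> Interval:
    """Smallest interval that contains all positives, is closed under the
    implications (a in H forces b in H), and excludes every negative example."""
    if not pos:
        return None
    lo, hi = min(pos), max(pos)
    changed = True
    while changed:
        changed = False
        for a, b in imp:
            if lo <= a <= hi and not lo <= b <= hi:
                lo, hi = min(lo, b), max(hi, b)
                changed = True
    if any(lo <= n <= hi for n in neg):
        raise ValueError("no interval invariant is consistent with the sample")
    return (lo, hi)


def teacher(h: Interval):
    """Stand-in for the program verifier: checks initiation, consecution, and
    safety by enumerating the states inside the candidate interval."""
    if not contains(h, INIT):
        return ("positive", INIT)                 # initiation failed
    lo, hi = h
    for x in range(lo, hi + 1):
        if guard(x) and not contains(h, body(x)):
            return ("implication", (x, body(x)))  # consecution failed
        if not guard(x) and not post(x):
            return ("negative", x)                # safety failed
    return None                                   # h is an inductive invariant


def ice_learn(max_rounds: int = 100) -> Interval:
    pos: Set[int] = set()
    neg: Set[int] = set()
    imp: Set[Tuple[int, int]] = set()
    for _ in range(max_rounds):
        h = learner(pos, neg, imp)
        cex = teacher(h)
        if cex is None:
            return h
        kind, data = cex
        if kind == "positive":
            pos.add(data)
        elif kind == "negative":
            neg.add(data)
        else:
            imp.add(data)
    raise RuntimeError("round limit reached without finding an invariant")


print(ice_learn())  # (0, 10)
```

Because this learner never generalizes beyond what the feedback forces, it needs one round per loop iteration. Practical ICE learners use richer hypothesis classes and generalize more aggressively, which is what lets the approach handle realistic programs.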

ICE learning has proven to be a very powerful approach that allows fully automatic verification of a wide variety of programs, ranging from recursive and concurrent programs over numeric data types [1], to algorithms manipulating dynamically allocated data structures [2], to industry-size GPU kernels [3]. In addition, the principles underlying ICE learning can be lifted to other challenging verification tasks, such as the verification of parameterized systems [4] and, in ongoing research, the verification of cyber-physical and AI-based systems. You might want to try a demo immediately in your browser.

Synthesis goes beyond verification and could be considered the holy grail of computer science. In contrast to checking whether a hand-crafted program meets its specification, the dream is to fully automatically generate software (or a circuit for that matter) from specifications in a correct-by-construction manner.

Although this dream is unrealistic in its whole generality, there exist various application domains in which automated synthesis techniques have been applied with great success. In our own research, for instance, we have developed techniques for synthesizing safety controllers for reactive, cyber-physical systems that have to interact with a complex, and perhaps only partially known, environment [5, 6]. Moreover, we have proposed a general framework for generating loop-free code from input-output examples and specifications written in first-order logic [7]. Similar to ICE learning, these methods combine inductive and deductive reasoning, thereby unveiling and exploiting synergies of modern machine learning algorithms and highly-optimized symbolic reasoning engines.
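
As a rough illustration of synthesis from input-output examples, the sketch below searches for a loop-free, piece-wise function of the form `if p then t1 else t2` that agrees with all given examples. The term and predicate sets are hypothetical. The nested enumeration is also a simplification. The framework in [7] instead learns the branching conditions with a classifier and also handles first-order specifications, not only examples.

```python
from typing import Callable, Dict, List, Optional, Tuple

Example = Tuple[Tuple[int, int], int]  # ((x, y), expected output)

# Hypothetical building blocks; a real synthesizer would derive them from a grammar.
TERMS: Dict[str, Callable[[int, int], int]] = {
    "x": lambda x, y: x,
    "y": lambda x, y: y,
    "x + y": lambda x, y: x + y,
}
PREDICATES: Dict[str, Callable[[int, int], bool]] = {
    "x <= 0": lambda x, y: x <= 0,
    "x >= y": lambda x, y: x >= y,
}


def synthesize(examples: List[Example]) -> Optional[str]:
    """Return the first program (in enumeration order) consistent with all examples."""
    # 1. A single term that is correct on every example.
    for name, term in TERMS.items():
        if all(term(*inp) == out for inp, out in examples):
            return name
    # 2. A two-piece function "if p then t1 else t2".
    for pname, pred in PREDICATES.items():
        for n1, t1 in TERMS.items():
            for n2, t2 in TERMS.items():
                if all((t1 if pred(*inp) else t2)(*inp) == out for inp, out in examples):
                    return f"if {pname} then {n1} else {n2}"
    return None


max_examples = [((1, 2), 2), ((5, 3), 5), ((0, 0), 0), ((-1, -4), -1)]
print(synthesize(max_examples))  # if x >= y then x else y
```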

Both verification and synthesis rely on the ability to write correct formal specifications, which have to precisely capture the engineer’s intuitive understanding of the system in question. In practice, however, formalizing the requirements of a system is notoriously difficult, and it is well known that the use of standard formalisms such as temporal logics requires a level of sophistication that many users might never develop.

We have recently started a new research project to combat this serious obstacle. Its main objective is to design algorithms that learn formal specifications in interaction with human engineers. As a first step towards this goal, we have developed a learning algorithm for the specification language “Linear Temporal Logic (LTL)” [8], which is the de facto standard in many verification and synthesis applications. You might think of this algorithm as a recommender system for formal specifications: the human engineer provides examples of the desired and undesired behavior of the system in question, while the recommender generates a series of LTL specifications that are consistent with the given examples; the engineer can then either choose one of the generated specifications or provide additional examples and rerun the recommender.
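
A naive version of such a recommender can be sketched by enumerating formulas in order of size and keeping those that hold on every desired example and on no undesired one. The sketch makes two assumptions that differ from the setting described in the text and in [8]. First, examples are finite traces, so `X`, `F`, `G`, and `U` use finite-trace semantics. Second, the search is brute-force enumeration rather than the learning algorithm of [8]. Formula syntax and function names are illustrative.

```python
from itertools import product
from typing import Dict, Iterator, List, Set

Trace = List[Set[str]]  # finite trace: the set of atomic propositions true at each step
Formula = tuple         # ("ap", "p"), ("F", f), ("U", f, g), ...

UNARY = ("!", "X", "F", "G")
BINARY = ("&", "|", "U")


def holds(f: Formula, t: Trace, i: int = 0) -> bool:
    """Evaluate f at position i of a non-empty finite trace (finite-trace semantics)."""
    op = f[0]
    if op == "ap":
        return f[1] in t[i]
    if op == "!":
        return not holds(f[1], t, i)
    if op == "X":
        return i + 1 < len(t) and holds(f[1], t, i + 1)
    if op == "F":
        return any(holds(f[1], t, j) for j in range(i, len(t)))
    if op == "G":
        return all(holds(f[1], t, j) for j in range(i, len(t)))
    if op == "&":
        return holds(f[1], t, i) and holds(f[2], t, i)
    if op == "|":
        return holds(f[1], t, i) or holds(f[2], t, i)
    if op == "U":
        return any(holds(f[2], t, j) and all(holds(f[1], t, k) for k in range(i, j))
                   for j in range(i, len(t)))
    raise ValueError(f"unknown operator {op!r}")


def of_size(atoms: List[str], size: int, cache: Dict[int, List[Formula]]) -> List[Formula]:
    """All formulas built from exactly `size` operators and atoms."""
    if size not in cache:
        if size == 1:
            result = [("ap", a) for a in atoms]
        else:
            result = [(op, f) for op in UNARY for f in of_size(atoms, size - 1, cache)]
            for left in range(1, size - 1):
                for op in BINARY:
                    for f, g in product(of_size(atoms, left, cache),
                                        of_size(atoms, size - 1 - left, cache)):
                        result.append((op, f, g))
        cache[size] = result
    return cache[size]


def recommend(pos: List[Trace], neg: List[Trace], max_size: int) -> Iterator[Formula]:
    """Yield formulas, smallest first, that hold on all positive and no negative traces."""
    atoms = sorted({p for t in pos + neg for step in t for p in step})
    cache: Dict[int, List[Formula]] = {}
    for size in range(1, max_size + 1):
        for f in of_size(atoms, size, cache):
            if all(holds(f, t) for t in pos) and not any(holds(f, t) for t in neg):
                yield f


def show(f: Formula) -> str:
    if f[0] == "ap":
        return f[1]
    if len(f) == 2:
        return f"!{show(f[1])}" if f[0] == "!" else f"{f[0]} {show(f[1])}"
    return f"({show(f[1])} {f[0]} {show(f[2])})"


positive = [[{"q"}, {"p"}], [{"p"}]]
negative = [[{"q"}, {"q"}]]
print(show(next(recommend(positive, negative, max_size=3))))  # F p
```

Because `recommend` is a generator, an engineer can ask for the next candidate, or add more example traces and rerun it, mirroring the interaction described above.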

In ongoing research, we are extending our learning algorithm to a wide range of other specification languages, including Computation Tree Logic, Signal Temporal Logic, and the Property Specification Language. Moreover, we are developing feedback mechanisms that allow for a tighter integration of the human engineer into the loop. Again, you can try our technology immediately in your browser.

[1] D’Souza, Deepak; Ezudheen, P.; Garg, Pranav; Madhusudan, P.; Neider, Daniel: Horn-ICE Learning for Synthesizing Invariants and Contracts. In: Proceedings of the ACM on Programming Languages (PACMPL), volume 2 issue OOPSLA, pages 131:1–131:25. ACM, 2018.

[2] Neider, Daniel; Madhusudan, P.; Garg, Pranav; Saha, Shambwaditya; Park, Daejun: Invariant Synthesis for Incomplete Verification Engines. In: 24th International Conference on Tools and Algorithms for the Construction and Analysis of Systems (TACAS 2018), volume 10805 of Lecture Notes in Computer Science, pages 232–250. Springer, 2018.

[3] Neider, Daniel; Saha, Shambwaditya; Garg, Pranav; Madhusudan, P.: Sorcar: Property-Driven Algorithms for Learning Conjunctive Invariants. In: 26th International Static Analysis Symposium (SAS 2019), volume 11822 of Lecture Notes in Computer Science, pages 323–346. Springer, 2019.

[4] Neider, Daniel; Jansen, Nils: Regular Model Checking Using Solver Technologies and Automata Learning. In: 5th International NASA Formal Methods Symposium (NFM 2013), volume 7871 of Lecture Notes in Computer Science, pages 16–31. Springer, 2013.

[5] Neider, Daniel; Topcu, Ufuk: An Automaton Learning Approach to Solving Safety Games over Infinite Graphs. In: 22nd International Conference on Tools and Algorithms for the Construction and Analysis of Systems (TACAS 2016), volume 9636 of Lecture Notes in Computer Science, pages 204–221. Springer, 2016.

[6] Neider, Daniel; Markgraf, Oliver: Learning-based Synthesis of Safety Controllers. In: 2019 International Conference on Formal Methods in Computer Aided Design (FMCAD 2019), pages 120–128. IEEE, 2019.

[7] Neider, Daniel; Saha, Shambwaditya; Madhusudan, P.: Synthesizing Piece-wise Functions by Learning Classifiers. In: 22nd International Conference on Tools and Algorithms for the Construction and Analysis of Systems (TACAS 2016), volume 9636 of Lecture Notes in Computer Science, pages 186–203. Springer, 2016.

[8] Neider, Daniel; Gavran, Ivan: Learning Linear Temporal Properties. In: 2018 International Conference on Formal Methods in Computer Aided Design (FMCAD 2018), pages 148–157. IEEE, 2018.

Researchers from the Max Planck Institute for Software Systems (MPI-SWS) and the new Max Planck Institute for Security and Privacy (MPI-SP) have authored a total of 8 papers accepted to POPL 2020 (over 10% of all accepted papers). This is the third year in a row that MPI-SWS researchers have published 5 papers in POPL. Furthermore, MPI-SWS faculty member Derek Dreyer is the first person ever to publish 4 papers in a single POPL. Congratulations to all our POPL authors!

MPI-SWS papers:

MPI-SP papers:

The following three MPI-SWS papers have been accepted to NeurIPS 2019, the flagship conference in machine learning:

MPI-SWS researchers, in collaboration with colleagues at TU Delft, the CISTER Research Centre at Polytechnic Institute of Porto, Saarland University, Bosch Corporate Research, and Scuola Superiore Sant’Anna in Pisa, Italy, are proud to present three papers at this year's Euromicro Conference on Real-Time Systems (ECRTS) in Stuttgart, Germany. ECRTS is one of the three top-ranked conferences on real-time systems (according to Google Scholar Metrics, it is ranked number one).

Consider the everyday websites and apps that we use: online shops, news websites, search engines, social networks, navigation apps, instant messaging apps, and many more. Most of these programs don't just run in isolation on our laptops or phones, but instead connect over the internet to backends and databases running in datacenters across the world. These backends perform a wide range of tasks, including constructing your personalized social network feed, storing and retrieving comments on message boards, and calculating results for your search query. From our perspective as users, the actions we perform are simple, such as opening the app and loading our personalized profile. But under the hood, each action usually results in complex processing across many processes and machines in a datacenter.

It has never been easier to write and deploy complex programs like these. Cloud computing companies that own datacenters (such as Google, Amazon, and Microsoft) will gladly rent out computing resources on demand, at the touch of a button. Using designs like microservices, it is easy for programmers to construct complex programs out of smaller, simpler building blocks. There are frameworks and open-source software packages to help developers construct big applications out of small pieces, to spread those pieces out over multiple machines in a datacenter, and to have the pieces communicate and interact with each other over the network.

Problems show up when software goes live. Compared to developing and deploying the software, it is much harder to make sure everything goes smoothly when the software is up and running. Distributed computer programs have lots of moving pieces, and there are lots of opportunities for things to go wrong. For example, if one machine in the datacenter has a hardware problem, or the code is buggy, or too many people are trying to access it at once, the effects can be wide-ranging. It can create a butterfly effect of problems, which we term cascading failures, that can lead to the app or website as a whole becoming slow, or going down entirely. It's hard for programmers to get to the bottom of these kinds of problems, because there's no single machine or process doing all the work. A problem that occurs on one machine might manifest as strange symptoms on a different machine later on. Figuring out the root cause of a problem is challenging, as is anticipating problems in the first place. Even big internet companies like Facebook and Google experience problems like this today.

These kinds of problems motivate the research of the Cloud Software Systems Research Group at the Max Planck Institute for Software Systems. We research ways for operators to understand what's going on in their live distributed system, to troubleshoot problems when they occur at runtime, and to design systems that proactively avoid problems. One approach we take is to design distributed tracing tools that can be used by the system operators. The goal of distributed tracing is to record information about what a program does while it's running. The tools record events, metrics, and performance counters, which together expose the current state and performance of the system, and how it changes over time. A key additional step taken by distributed tracing tools is to record the causal ordering of events happening in the system, that is, the interactions and dependencies between machines and processes. Causal ordering is very useful for diagnosing problems that span multiple processes and machines, especially when there might be lots of concurrent, unrelated activity going on at the same time. It lets us reconstruct the end-to-end execution paths of requests, across all components and machines, and then reason about the sequence of conditions and events that led up to a problem. Without causal ordering, this information is missing, and pinpointing the root cause of a problem would be like searching for a needle in a haystack.
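
Here is a minimal sketch of how recorded causal ordering can be used. Each recorded unit of work (a span) stores the identifier of the span that caused it. The end-to-end path of a request can then be rebuilt by following parent links, and unrelated concurrent requests stay separate. The `Span` record, its fields, and the service names are hypothetical. This is not the data model of any particular tracing tool cited below.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Span:
    span_id: str
    parent_id: Optional[str]  # None marks the root span of a request
    service: str
    error: bool = False


def causal_path(by_id: Dict[str, Span], span_id: str) -> List[str]:
    """Walk parent links from a span back to its request's root span."""
    path: List[str] = []
    current: Optional[Span] = by_id.get(span_id)
    while current is not None:
        path.append(current.service)
        current = by_id.get(current.parent_id) if current.parent_id else None
    return list(reversed(path))


def deepest_failures(spans: List[Span]) -> List[List[str]]:
    """Causal paths ending at failing spans that have no failing child, i.e. the
    furthest point downstream that an error can be traced to."""
    by_id = {s.span_id: s for s in spans}
    has_failing_child = {s.parent_id for s in spans if s.error and s.parent_id}
    return [causal_path(by_id, s.span_id) for s in spans
            if s.error and s.span_id not in has_failing_child]


spans = [
    Span("a1", None, "frontend", error=True),
    Span("a2", "a1", "feed", error=True),
    Span("a3", "a2", "db", error=True),
    Span("a4", "a1", "auth"),
    Span("b1", None, "frontend"),   # a concurrent, unrelated request
    Span("b2", "b1", "search"),
]
print(deepest_failures(spans))  # [['frontend', 'feed', 'db']]
```

The concurrent request (`b1`, `b2`) is never mixed into the failing path, because the parent links, not timestamps, determine which events belong together.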

The Cloud Software Systems Research Group has looked at a number of challenges in making distributed tracing tools efficient, scalable, and more widely deployable. In our recent work, we have thought about how you can efficiently insert instrumentation that records entirely new information into an already-running system, without having to rebuild or restart the system [1]. We have looked at problems in dealing with the large volume of data generated by distributed tracing tools, and deciding which data is most valuable to keep if there's not enough room to keep it all [2]. We have also considered the implications of distributed tracing at extremely large scale, and how to efficiently collect, aggregate, and process tracing data in real time [3].
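
Deciding what to keep when not all tracing data fits can be framed as weighted sampling: traces judged more valuable get a higher weight and are more likely to survive. The sketch below is a standard weighted reservoir sampler, the Efraimidis-Spirakis scheme: give each item the key u^(1/w) for a uniform random u and keep the k largest keys. It is a generic stand-in, not the method of [2]. In particular, how weights are assigned to traces, which is the substance of that work, is simply assumed to be given here. The trace names and weights are hypothetical.

```python
import heapq
import random
from typing import Iterable, List, Tuple, TypeVar

T = TypeVar("T")


def weighted_reservoir(stream: Iterable[Tuple[T, float]], k: int,
                       rng: random.Random) -> List[T]:
    """Keep k items from a stream of (item, weight) pairs; heavier items are
    proportionally more likely to be kept. Uses O(k) memory."""
    heap: List[Tuple[float, int, T]] = []  # min-heap of (key, arrival index, item)
    for idx, (item, weight) in enumerate(stream):
        if weight <= 0:
            continue  # zero-weight items are never kept
        key = rng.random() ** (1.0 / weight)
        if len(heap) < k:
            heapq.heappush(heap, (key, idx, item))
        elif key > heap[0][0]:
            heapq.heapreplace(heap, (key, idx, item))  # evict the smallest key
    return [item for _, _, item in sorted(heap, reverse=True)]


# Hypothetical weights: one unusual trace is valued far above nine routine ones.
traces = [("rare-error-trace", 1e6)] + [(f"common-trace-{i}", 1.0) for i in range(9)]
print(weighted_reservoir(traces, k=3, rng=random.Random(42)))
```

Because it processes traces one at a time and only ever holds k of them, a sampler of this shape can run over a stream of traces without storing the full stream.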

In our ongoing work, we are investigating ways for the data recorded by tracing tools to feed back into decisions made by datacenter infrastructure, such as resource management, scheduling, and load balancing. We are also considering new challenges that arise in scalable data analysis: how do you analyze large datasets of traces and derive insights about aggregate system behavior? One approach we are exploring uses techniques from representation learning to transform richly annotated tracing data into a more tractable form for interactive analysis. More broadly, our group investigates a variety of approaches besides just distributed tracing tools, including ways to better design and develop the distributed systems in the first place. Ultimately, our goal is to make modern cloud systems easier to operate, understand, and diagnose.

References

[1] Jonathan Mace, Ryan Roelke, and Rodrigo Fonseca. Pivot Tracing: Dynamic Causal Monitoring for Distributed Systems. In Proceedings of the 25th ACM Symposium on Operating Systems Principles (SOSP '15), 2015.

[2] Pedro Las-Casas, Jonathan Mace, Dorgival Guedes, and Rodrigo Fonseca. Weighted Sampling of Execution Traces: Capturing More Needles and Less Hay. In Proceedings of the 9th ACM Symposium on Cloud Computing (SoCC'18), 2018.

[3] Jonathan Kaldor, Jonathan Mace, Michał Bejda, Edison Gao, Wiktor Kuropatwa, Joe O'Neill, Kian Win Ong, Bill Schaller, Pingjia Shan, Brendan Viscomi, Vinod Venkataraman, Kaushik Veeraraghavan, Yee Jiun Song. Canopy: An End-to-End Performance Tracing And Analysis System. In Proceedings of the 26th ACM Symposium on Operating Systems Principles (SOSP '17), 2017.

An article in the career guide of Nature titled "How Germany is winning at turning its research to commercial application" mentions the work of Paul Francis' group on anonymization and the spinoff Aircloak.

A long-standing problem both within research and in society generally is that of how to analyze data about people without risking the privacy of those people. There is an ever-growing amount of data about people: medical, financial, social, government, geo-location, etc. This data is very valuable in terms of better understanding ourselves. Unfortunately, analyzing the data in its raw form carries the risk of exposing private information about people.

The problem of how to analyze data while protecting privacy has been around for more than 40 years, ever since the first data processing systems were developed. Most workable solutions are ad hoc: practitioners try things like removing personally identifying information (e.g. names and addresses), aggregating data, removing outlying data, and even swapping some data between individuals. This process can work reasonably well, but it is time-consuming, requires substantial expertise to get right, and invariably limits the accuracy of the analysis or the types of analysis that can be done.

A holy grail within computer science is to come up with an anonymization system that has formal guarantees of anonymity and provides good analytics. Most effort in this direction has focused on two ideas, k-anonymity and Differential Privacy. Both can provide strong anonymity, but except in rare cases neither can do so while still providing adequate analytics. As a result, common practice is still to use informal ad hoc techniques with weak anonymization, and to mitigate risk, for instance, by sharing data only with trusted partners.
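
For reference, the k-anonymity condition is easy to state in code. A table is k-anonymous with respect to a set of quasi-identifying columns if every combination of values in those columns occurs in at least k rows. The table below is hypothetical and is assumed to have already been generalized by truncating ZIP codes and bucketing ages.

```python
from collections import Counter
from typing import Dict, List, Sequence

Row = Dict[str, str]


def is_k_anonymous(rows: List[Row], quasi_identifiers: Sequence[str], k: int) -> bool:
    """True if every combination of quasi-identifier values is shared by at
    least k rows, so no row can be singled out by those attributes alone."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return all(size >= k for size in groups.values())


# Hypothetical table after generalization: ZIP codes truncated, ages bucketed.
rows = [
    {"zip": "661**", "age": "30-39", "diagnosis": "flu"},
    {"zip": "661**", "age": "30-39", "diagnosis": "asthma"},
    {"zip": "662**", "age": "40-49", "diagnosis": "flu"},
    {"zip": "662**", "age": "40-49", "diagnosis": "diabetes"},
]
print(is_k_anonymous(rows, ["zip", "age"], k=2))  # True
print(is_k_anonymous(rows, ["zip", "age"], k=3))  # False
```

The generalization that makes this table 2-anonymous is also what costs analytic accuracy: exact ages and full ZIP codes are no longer available to the analyst.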

The European Union has raised the stakes with the General Data Protection Regulation (GDPR). The GDPR has strict rules on how personal data may be used, and threatens organizations that do not follow the rules with large fines. However, the GDPR says that if data is anonymous, then it is not considered personal and does not fall under the rules. Unfortunately, there are no precise guidelines on how to determine whether data is anonymous. Member states are expected to come up with certification programs for anonymity, but do not know how to do so.

This is where we come in. Paul Francis' group, in research partnership with the startup Aircloak, has been developing an anonymizing technology called Diffix over the last five years. Diffix is an empirical rather than a formal technology, and so the question remains "how anonymous is Diffix?" While it may not be possible to precisely answer that question, one way we try to answer it is through a bounty program: we pay attackers who can demonstrate how to compromise anonymity in our system. Last year we ran the first (and still only) bounty program for anonymity. The program was successful in that some attacks were found, and in the process of designing defensive measures, Diffix has improved.

In order to run the bounty program, we naturally needed a measure of anonymity so that we could decide how much to pay attackers. We designed a measure based on how much confidence an attacker has in a prediction of an individual's data values, among other things. At some point, we realized that our measure applies not just to attacks on Diffix, but to any anonymization system. We also realized that our measure might be useful in the design of certification programs for anonymity.
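
One way to turn an attacker's "confidence in a prediction" into a number is to compare the attacker's precision with the precision of a baseline guess that uses no attack at all. The function below is a hypothetical illustration of that idea. It is not the definition used by the Diffix bounty program or the GDA Score, which also take other factors into account. The data is invented for the example.

```python
from typing import Sequence


def confidence_improvement(predictions: Sequence[str], truths: Sequence[str],
                           baseline: float) -> float:
    """Hypothetical score in [0, 1]: how much better the attacker's precision is
    than a baseline guess (0 = no better than baseline, 1 = always right)."""
    if not predictions or len(predictions) != len(truths):
        raise ValueError("need one ground-truth value per prediction")
    if not 0.0 <= baseline < 1.0:
        raise ValueError("baseline precision must be in [0, 1)")
    precision = sum(p == t for p, t in zip(predictions, truths)) / len(predictions)
    return max(0.0, (precision - baseline) / (1.0 - baseline))


# An attacker guesses a binary attribute for 10 users and is right 8 times,
# while guessing the majority value alone would be right half the time.
guesses = ["yes"] * 8 + ["no"] * 2
actual = ["yes"] * 10
print(confidence_improvement(guesses, actual, baseline=0.5))  # roughly 0.6
```

Normalizing by the baseline is what makes such a score comparable across datasets: being right 80% of the time is impressive for a coin-flip attribute but worthless if 80% of users share the same value.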

We decided to develop a general score for anonymity, and to build tools that would allow anyone to apply the measure to any anonymization technology. The score is called the GDA Score, for General Data Anonymity Score.

The primary strength of the GDA Score is that it can be applied to any anonymization method, and therefore allows apples-to-apples comparisons. The primary weakness is that it is based on empirical attacks (real attacks against real systems), and therefore the score is only as good as the attacks themselves. If there are unknown attacks on a system, then the score won't reflect this and may therefore make a system look more anonymous than it is.

Our hope is that over time enough attacks will be developed that we can have high confidence in the GDA Score. Towards that end, we've started the Open GDA Score Project. This is a community effort to provide software and databases in support of developing new attacks, and a repository where the scores can be viewed. We recently launched the project in the form of a website, www.gda-score.org, and some initial tools and simple attacks. We will continue to develop tools and new attacks, but our goal is to attract broad participation from the community.

For more information, visit www.gda-score.org.

The article "Enhancing Human Learning via spaced repetition optimization", coauthored by MPI-SWS and MPI-IS researchers, has been published in the Proceedings of the National Academy of Sciences (PNAS), a highly prestigious journal.

The (open-access) article can be found here: https://www.pnas.org/content/early/2019/01/18/1815156116.

What's more, the MPI-SWS Software Analysis and Verification group has a whole session to itself at POPL 2019. The weak memory session on Thursday, Jan 17, comprises three papers by Viktor Vafeiadis, his students, postdocs, and collaborators.

The following five MPI-SWS papers have been accepted to NIPS 2018, the flagship conference in machine learning:

Many real-world systems involve repeatedly making decisions under uncertainty: for instance, choosing one of several products to recommend to a user in an online recommendation service, or dynamically allocating resources among available stock options in a financial market. Machine learning (ML) algorithms driving these systems typically operate under the assumption that they are interacting with static components, e.g., users' preferences are fixed, trading tools providing stock recommendations are static, and data distributions are stationary. This assumption is often violated in modern systems, as these algorithms are increasingly interacting with and seeking information from learning agents including people, robots, and adaptive adversaries. Consequently, many well-studied ML frameworks and algorithmic techniques fail to provide desirable theoretical guarantees; for instance, algorithms might converge to a sub-optimal solution or perform arbitrarily badly in these settings.

Researchers at the Machine Teaching Group, MPI-SWS are designing novel ML algorithms that have to interact with agents that are adaptive or learning over time, especially in situations when the algorithm's decisions directly affect the state dynamics of these agents. In recent work [1], they have studied the above-mentioned problem in the context of two fundamental machine learning frameworks: (i) online learning using experts' advice and (ii) active learning using labeling oracles. In particular, they consider a setting where experts/oracles themselves are learning agents. For instance, active learning algorithms typically query labels from an oracle, e.g., a (possibly noisy) domain expert; however, in emerging crowd-powered systems, these experts are getting replaced by inexpert participants who could themselves be learning over time (e.g., volunteers in citizen science projects). They have shown that when these experts/oracles themselves are learning agents, well-studied algorithms (like the EXP3 algorithm) fail to converge to the optimal solution and can have arbitrarily bad performance for this new problem setting. Furthermore, they provide an impossibility result showing that without sharing any information across experts, it is impossible to achieve convergence guarantees. This calls for developing novel algorithms with practical ways of coordination between the central algorithm and learning agents to achieve desired guarantees.
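
For context, the sketch below implements the standard EXP3 algorithm for the adversarial multi-armed bandit, a close relative of learning from experts' advice in which only the chosen expert's reward is observed. It is shown in the stationary setting, where it behaves as expected. The reward function is a hypothetical stand-in. The point of the work described above is that when the experts or oracles are themselves learning agents, algorithms like this one can fail to converge to the optimal solution.

```python
import math
import random
from typing import Callable, List


def exp3_distribution(weights: List[float], gamma: float) -> List[float]:
    """Mix the weight-proportional distribution with uniform exploration."""
    total = sum(weights)
    k = len(weights)
    return [(1 - gamma) * w / total + gamma / k for w in weights]


def exp3(num_arms: int, reward: Callable[[int, int], float], rounds: int,
         gamma: float, rng: random.Random) -> List[float]:
    """Run EXP3 and return the final selection distribution.

    reward(t, arm) must return a value in [0, 1]; only the chosen arm's reward is seen.
    """
    weights = [1.0] * num_arms
    for t in range(rounds):
        probs = exp3_distribution(weights, gamma)
        arm = rng.choices(range(num_arms), weights=probs)[0]
        estimate = reward(t, arm) / probs[arm]  # importance-weighted, unbiased estimate
        weights[arm] *= math.exp(gamma * estimate / num_arms)
        top = max(weights)
        weights = [w / top for w in weights]  # rescale to avoid overflow
    return exp3_distribution(weights, gamma)


# Stationary setting: arm 0 always pays 1, the other arms pay 0.
final = exp3(3, lambda t, arm: 1.0 if arm == 0 else 0.0, rounds=2000,
             gamma=0.1, rng=random.Random(0))
print([round(p, 3) for p in final])
```

Rescaling the weights by their maximum leaves the selection probabilities unchanged and only guards against floating-point overflow over long horizons.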

Currently, researchers at the Machine Teaching Group are studying these challenges in the context of designing next-generation human-AI collaborative systems. As a concrete application setting, consider a car driving scenario where the goal is to develop an assistive AI agent that drives the car in an auto-pilot mode while giving control back to the human driver in safety-critical situations. They study this setting by casting it as a multi-agent reinforcement learning problem. When the human agent has a stationary policy (i.e., the actions taken by the human driver in different states/scenarios are fixed), it is trivial to learn an optimal policy for the AI agent that maximizes the overall performance of this collaborative system. However, in real-life settings where a human driver would adapt their behavior in response to the presence of an auto-pilot mode, they show that the problem of learning an optimal policy for the AI agent becomes computationally intractable. This work is one of the recent additions to an expanding set of results and algorithmic techniques developed by MPI-SWS researchers in the nascent area of Machine Teaching [2, 3].

[1] Adish Singla, Hamed Hassani, and Andreas Krause. Learning to Interact with Learning Agents. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence (AAAI'18), 2018.

[2] Xiaojin Zhu, Adish Singla, Sandra Zilles, and Anna N. Rafferty. An Overview of Machine Teaching. arXiv 1801.05927, 2018.

[3] Maya Cakmak, Anna N. Rafferty, Adish Singla, Xiaojin Zhu, and Sandra Zilles. Workshop on Teaching Machines, Robots, and Humans. NIPS 2017.

Four papers to appear at OOPSLA'18 have been (co)-authored by MPI-SWS members.

Paul Francis was featured in an interview by CNIL, the French national data protection authority. The interview discusses the innovative way in which MPI-SWS is tackling the data anonymity problem. The interview follows Paul's visit to CNIL in May 2018, where he presented the first-ever bounty program for anonymity. The bounty program, designed by MPI-SWS and implemented by the startup Aircloak, is one of the innovative ways in which MPI-SWS develops practical data anonymity techniques.

In 1937, a young Englishman by the name of Alan M. Turing published a paper with the obscure title "On computable numbers, with an application to the Entscheidungsproblem" in the Proceedings of the London Mathematical Society. In doing so, he arguably laid the mathematical foundations of modern computer science. Turing's seminal contribution was to show that the famous Entscheidungsproblem, formulated by the great German mathematician David Hilbert several years earlier, could not be solved: more precisely, Turing proved (in modern parlance) that determining whether a given computer program halts cannot be done algorithmically; in other words, the famous Halting Problem is undecidable.

Although it seemed a rather esoteric concern at the time, the Halting Problem (along with related questions) has gained dramatically in importance and relevance in more recent times. Fast forward to the 21st century: it is now widely acknowledged that enabling engineers, programmers, and researchers to automatically verify and certify the correctness of the computer systems they design is one of the Grand Challenges of computer science. In a growing number of cases, it is absolutely critical that the software governing aspects of our daily lives (such as the software running on an aircraft controller) behaves exactly as intended, lest catastrophic consequences ensue.

Researchers at the Foundations of Algorithmic Verification group are investigating which classes of infinite-state programs can, at least in principle, be fully analyzed algorithmically. To this end, they view computer programs abstractly as dynamical systems and seek to design exact algorithms that fully analyze the behavior of such systems. In particular, they are currently tackling a range of central algorithmic problems in verification, synthesis, performance, and control for linear dynamical systems, drawing among others on tools from number theory, Diophantine geometry, and algebraic geometry. Their overarching goal is a systematic, exact computational treatment of important classes of dynamical systems and other fundamental models used in mathematics, computer science, and the quantitative sciences. Their achievements include several decidability and hardness results for linear recurrence sequences, which can be used to model simple loops in computer programs; these results answer a number of longstanding open questions in the mathematics and computer science literature.
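How a linear recurrence sequence models a simple loop can be sketched as follows. This is an illustration only, not one of the group's algorithms: the loop and the function names `terms` and `first_zero` are hypothetical. Asking whether such a sequence ever hits zero (the Skolem Problem) is the loop's termination question, and whether it is decidable in general is a longstanding open question. A bounded search like the one below can find a zero if one exists but can never certify that none exists, which is why exact methods are needed.

```python
from typing import List, Optional


def terms(coeffs: List[int], init: List[int], count: int) -> List[int]:
    """First `count` terms of u_n = coeffs[0]*u_{n-1} + ... + coeffs[k-1]*u_{n-k}."""
    k = len(coeffs)
    if len(init) != k:
        raise ValueError("an order-k recurrence needs exactly k initial values")
    seq = list(init)
    while len(seq) < count:
        # reversed(...) lists the last k terms newest first: u_{n-1}, ..., u_{n-k}.
        seq.append(sum(a * u for a, u in zip(coeffs, reversed(seq[-k:]))))
    return seq[:count]


def first_zero(coeffs: List[int], init: List[int], bound: int) -> Optional[int]:
    """Smallest n < bound with u_n == 0, or None if none of the first `bound` terms is zero.

    None says nothing about later terms: bounded search is only a semi-decision procedure.
    """
    for n, u in enumerate(terms(coeffs, init, bound)):
        if u == 0:
            return n
    return None


# Hypothetical loop:   x, y = 1, 1
#                      while x != 0: x, y = y, y - x
# The successive values of x satisfy u_n = u_{n-1} - u_{n-2} with u_0 = u_1 = 1.
print(first_zero([1, -1], [1, 1], 100))  # 2: the loop exits after two iterations
print(first_zero([1, 1], [1, 1], 100))   # None: Fibonacci-style terms stay positive
```

Python integers are unbounded, so the terms are computed exactly; with floating-point arithmetic a zero test like `u == 0` would be unreliable.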

In a series of recent papers [1, 2], they have attacked the so-called Zero Problem for linear differential equations, i.e., the question of determining algorithmically whether the unique solution to a given linear differential equation (with given initial conditions) has a zero. Such equations, which go back as far as Newton, are ubiquitous in mathematics, physics, and engineering; they are also particularly useful for modeling cyber-physical systems, i.e., digital systems that evolve in and interact with a continuous environment. In their work, they obtained several important partial results. If one is interested in the existence of a zero over a bounded time interval, then this can be determined algorithmically, provided that a certain hypothesis from number theory, known as Schanuel's Conjecture, is true. They were also able to partially explain why the Zero Problem has so far remained open in full generality: if one were able to solve it in dimension 9 (or higher), this would in turn enable one to solve various longstanding open problems in a field of mathematics known as Diophantine approximation. In doing so, they exhibited unexpected connections between the modeling and analysis of cyber-physical systems and seemingly unrelated deep mathematical theories dealing with questions about whole numbers.
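To make the Zero Problem concrete, here is a worked example under an assumption the text does not spell out: the equation has constant coefficients, as in the continuous linear dynamical systems studied in [1, 2]. In that case the unique solution is an exponential polynomial, and the question is whether it ever vanishes.

```latex
\begin{align*}
  &x^{(k)}(t) = a_{k-1}\,x^{(k-1)}(t) + \cdots + a_1\,x'(t) + a_0\,x(t),
    \qquad x(0), \dots, x^{(k-1)}(0) \text{ given},\\
  &x(t) = \sum_{j=1}^{m} P_j(t)\,e^{\lambda_j t},
    \qquad \lambda_1, \dots, \lambda_m \text{ the distinct roots of } z^k - a_{k-1}z^{k-1} - \cdots - a_0,\\
  &\text{Zero Problem: } \exists\, t \ge 0 : x(t) = 0\,?
    \qquad \text{Bounded variant: } \exists\, t \in [0, T] : x(t) = 0\,?\\[4pt]
  &\text{Example: } x'' = -x,\ x(0) = 1,\ x'(0) = 0
    \;\Rightarrow\; \lambda = \pm i,\quad x(t) = \tfrac{1}{2}\left(e^{it} + e^{-it}\right) = \cos t.
\end{align*}
```

Each polynomial P_j has degree less than the multiplicity of the root λ_j. In the example, the first zero is at t = π/2 ≈ 1.571, so the bounded question has the answer yes for T = 2 and no for T = 1. In general the roots λ_j are arbitrary algebraic numbers rather than ±i, and deciding zeros exactly for such expressions is where the difficulty lies.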

[1] Ventsislav Chonev, Joel Ouaknine, and James Worrell. On recurrent reachability for continuous linear dynamical systems. In Proceedings of the 31st Annual ACM/IEEE Symposium on Logic in Computer Science (LICS), 2016.

[2] Ventsislav Chonev, Joel Ouaknine, and James Worrell. On the Skolem Problem for continuous linear dynamical systems. In Proceedings of the 43rd International Colloquium on Automata, Languages, and Programming (ICALP), 2016.

Four papers from MPI-SWS have been accepted to AAAI 2018:

Three papers from MPI-SWS have been accepted to the 2018 Web Conference:

In 2018, MPI-SWS researchers authored a total of five POPL papers:

Furthermore, with the "RustBelt" paper, MPI-SWS faculty member Derek Dreyer cements a 10-year streak of having at least one POPL paper each year, breaking the all-time record of 9 years previously held by John Mitchell at Stanford. Congratulations Derek!

Machine learning algorithms are increasingly being used to automate decision making in several domains such as hiring, lending and crime-risk prediction. These algorithms have shown significant promise in leveraging large or “big” training datasets to achieve high prediction accuracy, sometimes surpassing even human accuracy.

Unfortunately, some recent investigations have shown that machine learning algorithms can also lead to unfair outcomes. For example, a recent ProPublica study found that COMPAS, a tool used in US courtrooms for assisting judges with crime risk prediction, was unfair towards black defendants. In fact, several studies from governments, regulatory authorities, researchers as well as civil rights groups have raised concerns about machine learning potentially acting as a tool for perpetuating existing unfair practices in society, and worse, introducing new kinds of unfairness in prediction tasks. As a consequence, a flurry of recent research has focused on defining and implementing appropriate computational notions of fairness for machine learning algorithms.

Existing computational notions of fairness in the machine learning literature are largely inspired by the concept of discrimination in social sciences and law. These notions require decision outcomes to ensure parity (i.e., equality) in treatment and in impact.

Notions based on parity in treatment require that the decision algorithm should not take into account the sensitive feature information (e.g., gender, race) of a user. Notions based on parity in impact require that the decision algorithm should give beneficial decision outcomes (e.g., granting a loan) to similar percentages of people from all sensitive feature groups (e.g., men, women).
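The two parity notions can be checked mechanically, as in the following minimal sketch. The helper names, the `"score"`/`"group"` user fields, the threshold classifiers, and the 0.05 tolerance for "similar percentages" are all hypothetical choices for illustration, not part of the researchers' work.

```python
from typing import Callable, Dict, Hashable, List, Sequence

User = Dict[str, object]
Classifier = Callable[[User], int]  # returns 1 for the beneficial outcome, 0 otherwise


def benefit_rates(decisions: Sequence[int], groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Fraction of each group's users who receive the beneficial outcome."""
    totals: Dict[Hashable, int] = {}
    positives: Dict[Hashable, int] = {}
    for d, g in zip(decisions, groups):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + d
    return {g: positives[g] / totals[g] for g in totals}


def has_impact_parity(decisions: Sequence[int], groups: Sequence[Hashable], tol: float = 0.05) -> bool:
    """Parity in impact: beneficial-outcome rates differ by at most `tol` (assumed tolerance)."""
    rates = benefit_rates(decisions, groups)
    return max(rates.values()) - min(rates.values()) <= tol


def has_treatment_parity(classifier: Classifier, users: List[User], group_values: List[str]) -> bool:
    """Parity in treatment: changing only a user's sensitive feature never changes the decision."""
    for u in users:
        if len({classifier({**u, "group": g}) for g in group_values}) > 1:
            return False
    return True


def blind(u: User) -> int:
    return int(u["score"] >= 0.5)  # ignores the sensitive feature


def aware(u: User) -> int:
    return int(u["score"] >= (0.4 if u["group"] == "a" else 0.5))  # group-specific thresholds


users = [
    {"score": 0.7, "group": "a"}, {"score": 0.45, "group": "a"}, {"score": 0.3, "group": "a"},
    {"score": 0.8, "group": "b"}, {"score": 0.55, "group": "b"}, {"score": 0.2, "group": "b"},
]
groups = [u["group"] for u in users]
print(has_treatment_parity(blind, users, ["a", "b"]), has_impact_parity([blind(u) for u in users], groups))  # True False
print(has_treatment_parity(aware, users, ["a", "b"]), has_impact_parity([aware(u) for u in users], groups))  # False True
```

On this made-up data, neither classifier satisfies both notions at once, which hints at why parity-based criteria can be restrictive.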

However, in many cases, these existing notions are too stringent and can lead to unexpected side effects. For example, ensuring parity has been shown to lead to significant reductions in prediction accuracy. Parity may also lead to scenarios where none of the groups involved in decision making (e.g., neither men nor women) receive beneficial outcomes. In other words, such scenarios might be preferred neither by the decision maker using the algorithm (due to diminished accuracy) nor by the groups involved (due to few beneficial outcomes).

In recent work, to appear at NIPS 2017, researchers at MPI-SWS have introduced two new computational notions of algorithmic fairness: preferred treatment and preferred impact. These notions are inspired by ideas related to envy-freeness and the bargaining problem in economics and game theory. Preferred treatment and preferred impact leverage these ideas to build more accurate solutions that are preferable for both the decision maker and the user groups.

The new notion of preferred treatment allows decisions to be based on sensitive feature information (thereby relaxing the parity treatment criterion) as long as the decision outcomes do not lead to envy. That is, each group of users prefers the outcomes it receives as its own group over the outcomes it would have received had it presented itself to the algorithm as another group.
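The envy-freeness condition can be illustrated with a minimal checker for a given set of group-specific classifiers. This sketch assumes that a group's benefit is the fraction of its members who receive the beneficial outcome; the helper names, the threshold classifiers, and the data are hypothetical, and the sketch only checks the condition rather than reproducing the researchers' training technique.

```python
from typing import Callable, Dict, List

User = Dict[str, float]
Classifier = Callable[[User], int]  # 1 = beneficial outcome


def group_benefit(classifier: Classifier, users: List[User]) -> float:
    """Fraction of `users` who receive the beneficial outcome under `classifier`."""
    return sum(classifier(u) for u in users) / len(users)


def satisfies_preferred_treatment(
    classifiers: Dict[str, Classifier], users_by_group: Dict[str, List[User]]
) -> bool:
    """Envy-freeness: no group would gain by being judged with another group's classifier."""
    for g, users in users_by_group.items():
        own = group_benefit(classifiers[g], users)
        for other, clf in classifiers.items():
            if group_benefit(clf, users) > own:
                return False  # group g envies the treatment given to group `other`
    return True


def threshold(t: float) -> Classifier:
    # Hypothetical score-threshold classifier used only for illustration.
    return lambda u: int(u["score"] >= t)


users_by_group = {
    "a": [{"score": 0.7}, {"score": 0.45}, {"score": 0.3}],
    "b": [{"score": 0.8}, {"score": 0.55}, {"score": 0.2}],
}
print(satisfies_preferred_treatment({"a": threshold(0.4), "b": threshold(0.5)}, users_by_group))  # True
print(satisfies_preferred_treatment({"a": threshold(0.6), "b": threshold(0.4)}, users_by_group))  # False
```

In the second case, group "a" would receive the beneficial outcome more often under group "b"'s threshold, so it envies that treatment.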

The new notion of preferred impact allows differences in beneficial outcome rates for different groups (thereby relaxing the parity impact criterion) as long as all the groups get more beneficial outcomes than what they would have received under the parity impact criterion.
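A matching sketch for preferred impact compares each group's benefit under its own classifier with its benefit under a parity baseline. Assumptions: the baseline is a single group-blind threshold classifier that happens to give both groups equal benefit on this made-up data, all names are hypothetical, and the comparison is non-strict (a tie counts as satisfying the condition), which is our choice for the sketch.

```python
from typing import Callable, Dict, List

User = Dict[str, float]
Classifier = Callable[[User], int]  # 1 = beneficial outcome


def group_benefit(classifier: Classifier, users: List[User]) -> float:
    """Fraction of `users` who receive the beneficial outcome under `classifier`."""
    return sum(classifier(u) for u in users) / len(users)


def satisfies_preferred_impact(
    classifiers: Dict[str, Classifier],
    parity_baseline: Classifier,
    users_by_group: Dict[str, List[User]],
) -> bool:
    """Every group gets at least the benefit it would receive under the parity baseline."""
    return all(
        group_benefit(classifiers[g], users) >= group_benefit(parity_baseline, users)
        for g, users in users_by_group.items()
    )


def threshold(t: float) -> Classifier:
    # Hypothetical score-threshold classifier used only for illustration.
    return lambda u: int(u["score"] >= t)


users_by_group = {
    "a": [{"score": 0.7}, {"score": 0.45}, {"score": 0.3}],
    "b": [{"score": 0.8}, {"score": 0.55}, {"score": 0.2}],
}
baseline = threshold(0.6)  # gives each group a benefit of 1/3 here, i.e., impact parity
print(satisfies_preferred_impact({"a": threshold(0.4), "b": threshold(0.5)}, baseline, users_by_group))   # True
print(satisfies_preferred_impact({"a": threshold(0.75), "b": threshold(0.5)}, baseline, users_by_group))  # False
```

The first pair of classifiers gives the two groups different thresholds yet leaves both better off than the parity baseline; the second leaves group "a" with no beneficial outcomes at all.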

In their work, MPI-SWS researchers have developed a technique to ensure that machine learning algorithms satisfy preferred treatment and/or preferred impact. They also tested their technique by designing crime-predicting machine learning algorithms that satisfy these notions. In their experiments, they show that preference-based fairness notions can provide significant gains in overall decision-making accuracy compared to parity-based fairness, while simultaneously increasing the beneficial outcomes for the groups involved.

This work is one of the most recent additions to an expanding set of techniques developed by MPI-SWS researchers to enable fairness, accountability and interpretability of machine learning algorithms.

Bilal Zafar, Isabel Valera, Manuel Gomez Rodriguez, Krishna Gummadi, and Adrian Weller. From Parity to Preference: Learning with Cost-effective Notions of Fairness. Neural Information Processing Systems (NIPS), Long Beach (CA, USA), December 2017.

The paper "Leveraging the Crowd to Detect and Reduce the Spread of Fake News and Misinformation" by MPI-SWS researchers, in collaboration with researchers at KAIST and MPI-IS, has been accepted to WSDM 2018, one of the flagship conferences in data mining.

WSDM will take place in Los Angeles (CA, USA) in February 2018.

The paper "From Parity to Preference: Learning with Cost-effective Notions of Fairness" by MPI-SWS researchers, in collaboration with researchers at the University of Cambridge and MPI-IS, has been accepted to NIPS 2017, the flagship conference in machine learning.

NIPS will take place in Long Beach (CA, USA) in December 2017.

The paper entitled "An Exact and Sustainable Analysis of Non-Preemptive Scheduling" by MPI-SWS researchers Mitra Nasri and Björn Brandenburg was accepted into the program of the 38th IEEE Real-Time Systems Symposium (RTSS 2017).

RTSS 2017 will be held from December 6 to December 8 in Paris, France.

The paper "A Broad View of the Ecosystem of Socially Engineered Exploit Documents" was accepted at NDSS '17 (Network and Distributed System Security Symposium). The authors include Stevens Le Blond, Cédric Gilbert, Utkarsh Upadhyay, and Manuel Gomez Rodriguez from MPI-SWS, as well as David Choffnes from Northeastern University.

Our understanding of exploit documents as a vector to deliver targeted malware is limited to a handful of studies done in collaboration with the Tibetans, Uyghurs, and political dissidents in the Middle East. In this measurement study, we present a complementary methodology relying only on publicly available data to capture and analyze targeted attacks with both greater scale and depth. In particular, we detect exploit documents uploaded over one year to a large anti-virus aggregator (VirusTotal) and then mine the social engineering information they embed to infer their likely targets and contextual information of the attacks. We identify attacks against two ethnic groups (Tibet and Uyghur) as well as 12 countries spanning America, Asia, and Europe. We then analyze the exploit documents dynamically in sandboxes to correlate and compare the exploited vulnerabilities and malware families targeting different groups. Finally, we use machine learning to infer the role of the uploaders of these documents to VirusTotal (i.e., attacker, targeted victim, or third-party), which enables their classification based only on their metadata, without any dynamic analysis. We make our datasets available to the academic community.

Five papers from MPI-SWS have been accepted to WWW 2017:

The 26th International World Wide Web Conference (WWW) will take place in Perth, Australia in April 2017.

Three papers from MPI-SWS were accepted to ACM POPL 2017:

Two papers from MPI-SWS were accepted to ACM WSDM 2017:

Two MPI-SWS papers were accepted into the program of the 24th International Conference on Real-Time Networks and Systems (RTNS 2016):

RTNS 2016 will be held from October 19 to October 21 in Brest, France.

MPI-SWS projects Aqua and Herd were discussed in an Ars Technica article describing next-generation anonymity networks.

Two papers by MPI-SWS researchers were accepted into the program of the 37th IEEE Real-Time Systems Symposium (RTSS 2016):

RTSS 2016 will be held from November 29 until December 2 in Porto, Portugal.

MPI-SWS faculty member Cristian Danescu-Niculescu-Mizil's work on linguistic change was mentioned in The New York Times. This is joint work with Robert West, Dan Jurafsky, Jure Leskovec, and Christopher Potts.

MPI-SWS faculty member Cristian Danescu-Niculescu-Mizil's work on conversational threads in social media was mentioned in The New York Times. This is joint work with Lars Backstrom, Jon Kleinberg, and Lillian Lee.