Consider the everyday websites and apps that we use: online shops, news websites, search engines, social networks, navigation apps, instant messaging apps, and many more. Most of these programs don't just run in isolation on our laptops or phones, but instead connect over the internet to backends and databases running in datacenters across the world. These backends perform a wide range of tasks, including constructing your personalized social network feed, storing and retrieving comments on message boards, ...

Consider the everyday websites and apps that we use: online shops, news websites, search engines, social networks, navigation apps, instant messaging apps, and many more. Most of these programs don't just run in isolation on our laptops or phones, but instead connect over the internet to backends and databases running in datacenters across the world. These backends perform a wide range of tasks, including constructing your personalized social network feed, storing and retrieving comments on message boards, and calculating results for your search query. From our perspective as users, the actions we perform are simple, such as opening the app and loading our personalized profile. But under the hood, each action usually results in complex processing across many processes and machines in a datacenter.

It has never been easier to write and deploy complex programs like these. Cloud computing companies who own datacenters (such as Google, Amazon, and Microsoft) will gladly rent out computer services at a touch of a button, on demand. Using designs like microservices, it is easy for programmers to construct complex programs out of smaller, simpler building blocks. There are frameworks and open-source software packages to help developers construct big applications out of small pieces, to spread those pieces out over multiple machines in a datacenter, and to have the pieces communicate and interact with each other over the network.

Problems show up when software goes live. Compared to developing and deploying the software, it is much harder to make sure everything goes smoothly when the software is up and running. Distributed computer programs have lots of moving pieces, and there are lots of opportunities for things to go wrong. For example, if one machine in the datacenter has a hardware problem, or the code is buggy, or too many people are trying to access it at once, the effects can be wide-ranging. It can create a butterfly effect of problems, which we term

cascading failures, that can lead to the app or website as a whole becoming slow, or going down entirely. It's hard for programmers to get to the bottom of these kinds of problems, because there's no single machine or process doing all the work. A problem that occurs on one machine might manifest as strange symptoms on a different machine later on. Figuring out the root cause of a problem is challenging, as is anticipating problems in the first place. Even big internet companies like Facebook and Google experience problems like this today.

These kinds of problems motivate the research of the

Cloud Software Systems Research Group at the Max Planck Institute for Software Systems. We research ways for operators to understand what's going on in their live distributed system, to troubleshoot problems when they occur at runtime, and to design systems that proactively avoid problems. One approach we take is to design

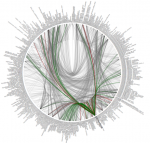

distributed tracing tools that can be used by the system operators. The goal of distributed tracing is to record information about what a program does while it's running. The tools record events, metrics, and performance counters, which together expose the current state and performance of the system, and how it changes over time. A key additional step taken by distributed tracing tools is to record the

causal ordering of events happening in the system — that is, the interactions and dependencies between machines and processes. Causal ordering is is very useful for diagnosing problems that span multiple processes and machines, especially when there might be lots of concurrent, unrelated activity going on at the same time. It lets us reconstruct the end-to-end execution paths of requests, across all components and machines, and then reason about the sequence of conditions and events that led up to a problem. Without causal ordering, this information is missing, and pinpointing the root cause of a problem would be like searching for a needle in a haystack.

The

Cloud Software Systems Research Group has looked at a number of challenges in making distributed tracing tools efficient, scalable, and more widely deployable. In our recent work, we have thought about how you can efficiently insert instrumentation to record entirely new information, into an already-running system, without having to rebuild or restart the system [1]. We have looked at problems in dealing with the large volume of data generated by distributed tracing tools, and deciding which data is most valuable to keep if there's not enough room to keep it all [2]. We have also considered the implications of distributed tracing at extremely large scale, and how to efficiently collect, aggregate, and process tracing data in real-time [3].

In our ongoing work, we are investigating ways for the data recorded by tracing tools to feed back in to decisions made by datacenter infrastructure, such as resource management, scheduling, and load balancing. We are also considering new challenges that arise in scalable data analysis: how do you analyze large datasets of traces and derive insights about aggregate system behavior? One approach we are exploring uses techniques in representational machine learning, to transform richly annotated tracing data into a more tractable form for interactive analysis. More broadly, our group investigates a variety of approaches besides just distributed tracing tools, including ways to better design and develop the distributed systems in the first place. Ultimately, our goal is to make modern cloud systems easier to operate, understand, and diagnose.

References

[1] Jonathan Mace, Ryan Roelke, and Rodrigo Fonseca. Pivot Tracing: Dynamic Causal Monitoring for Distributed Systems. In Proceedings of the 25th ACM Symposium on Operating Systems Principles (SOSP '15), 2015.

[2] Pedro Las-Casas, Jonathan Mace, Dorgival Guedes, and Rodrigo Fonseca. Weighted Sampling of Execution Traces: Capturing More Needles and Less Hay. In Proceedings of the 9th ACM Symposium on Cloud Computing (SoCC'18), 2018.

[3] Jonathan Kaldor, Jonathan Mace, Michał Bejda, Edison Gao, Wiktor Kuropatwa, Joe O'Neill, Kian Win Ong, Bill Schaller, Pingjia Shan, Brendan Viscomi, Vinod Venkataraman, Kaushik Veeraraghavan, Yee Jiun Song. Canopy: An End-to-End Performance Tracing And Analysis System. In Proceedings of the 26th ACM Symposium on Operating Systems Principles (SOSP '17), 2017.